Computer Vision for Self-Driving Motorcycles

by Harshit Aggrawal, Summer Intern SMLab

Computer vision (herein referred to as CV) plays a crucial role in enabling self-driving abilities in motor vehicles. In this blog, we will explore how CV works and why it is essential in the development of self-driving motorcycles and autonomous driving (herein referred to as AD).

The topics we'll cover are:

I. The idea and components behind CV

II. The integration of feature extraction, data association and photogrammetry in motor vehicles

III. The challenges faced in CV for autonomous driving

What is Computer Vision

Before understanding CV, let's first understand vision. What does it mean to have vision? Vision is the ability to acquire, process, analyse and understand signals from the electromagnetic spectrum (for humans, visible light).

CV extends this definition to digital images: it is vision performed by a computer on digital images. $^{1}$

In CV, our goal is to extract a high-level understanding from digital images. We do this in multiple steps. We start by extracting very low-level features, then process these low-level features in order to filter out unnecessary ones that would otherwise increase the computational cost. Finally, we associate the remaining low-level features to form a more complete understanding of the images.

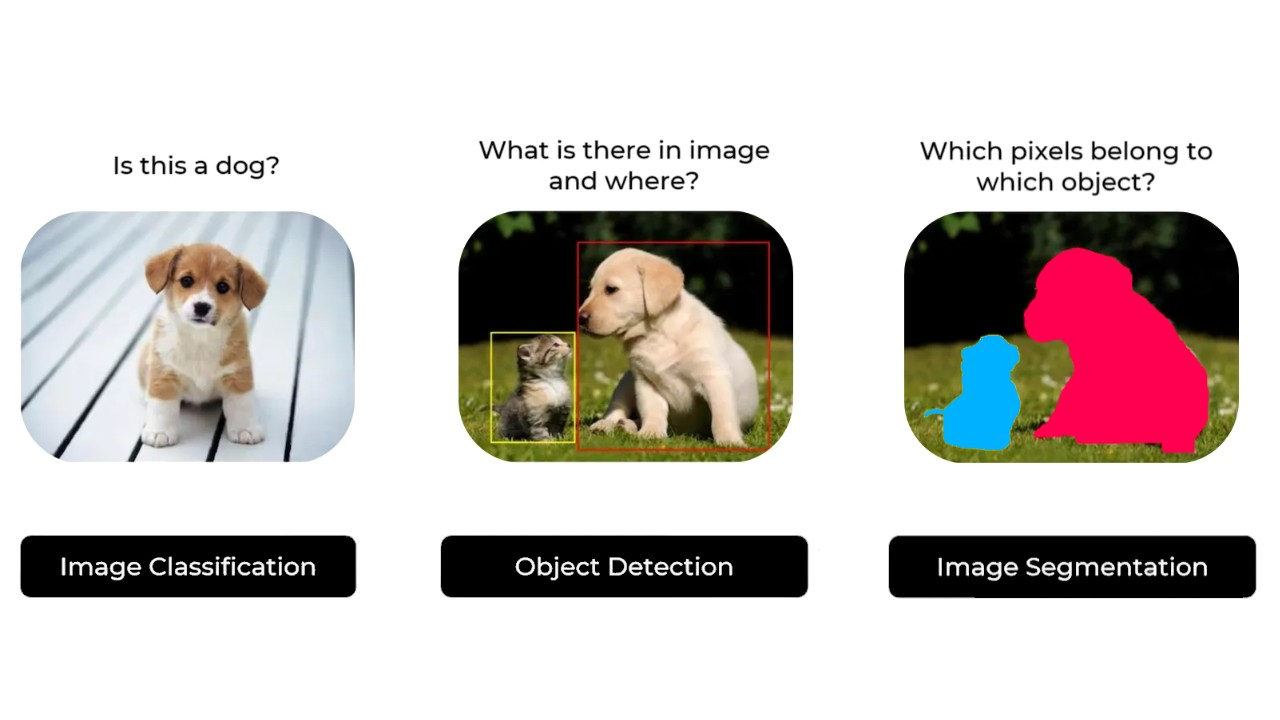

Understanding an image generally means solving one of the following three tasks: $^{2}$

- Segmentation: The task of assigning each pixel in an image to the object it belongs to.

- Classification: The task of determining what sort of objects are in an image as a whole.

- Detection: The task of localizing an object in an image, typically with a bounding box.
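To make the difference between these three outputs concrete, here is a minimal Python sketch. The tiny mask, the `mask_to_bbox` helper and the label are all made up for illustration: a segmentation result is a per-pixel mask, a detection result is a box around the object, and a classification result is a single label for the whole image.

```python
def mask_to_bbox(mask):
    """Return (x_min, y_min, x_max, y_max) of the non-zero pixels, or None if empty."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        return None
    return (min(cols), rows[0], max(cols), rows[-1])


# Hypothetical 4x5 segmentation mask: 1 marks pixels that belong to the object.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

bbox = mask_to_bbox(mask)  # detection-style output: (1, 1, 3, 2)
label = "motorcycle"       # classification-style output (illustrative label)
```

In a real system each of these outputs would come from a trained model; the sketch only shows how the three result types relate to one another.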

Brief introduction to Autonomous Driving

The problem of AD is essentially the ability to predict the trajectory of a robot, in this case a self-driving motorcycle, from the digital images and other sensor data that it acquires and analyses.

CV comes into play in every step of AD that processes sensor data after acquisition, whether that data comes from cameras, RGB-D sensors or LiDAR.

To read more about how AD works and how predictions are used, refer to this link

Feature Extraction

Feature extraction is a crucial step in computer vision that involves identifying and describing distinctive patterns or keypoints in an image. These features serve as the basis for further analysis and understanding of the image content.

In the context of autonomous driving (AD), feature extraction plays a vital role in processing the data captured by sensors such as cameras, RGB-D sensors, and LiDAR. By extracting relevant features from this data, AD systems can detect and recognize objects, localize them in the environment, and make predictions about their trajectories.

By leveraging the following feature extraction methods, AD systems can make sense of the environment, identify objects of interest, and make informed decisions based on the analyzed data:

Keypoints and Descriptors

SIFT

SIFT (Scale-Invariant Feature Transform) is a popular algorithm used for feature extraction in computer vision. It detects and describes keypoints in an image that are invariant to scale and rotation, and partially robust to affine distortion and illumination changes. SIFT features can be used for various tasks such as image matching, object recognition, and image stitching.

BRISK

BRISK (Binary Robust Invariant Scalable Keypoints) is another feature extraction algorithm that is designed to be fast and efficient. It uses a binary descriptor to represent keypoints, making it suitable for real-time applications. BRISK features are robust to changes in lighting conditions and can handle large scale and rotation variations.

SURF

SURF (Speeded-Up Robust Features) is a feature extraction algorithm that is similar to SIFT but designed to be faster. It detects keypoints with an approximation of the determinant of the Hessian, computed using box filters on integral images, and builds its descriptor from Haar wavelet responses around each keypoint. SURF features are robust to changes in scale and rotation, making them suitable for various computer vision tasks.

ORB

ORB (Oriented FAST and Rotated BRIEF) is a feature extraction algorithm that combines the FAST (Features from Accelerated Segment Test) keypoint detector with the BRIEF (Binary Robust Independent Elementary Features) descriptor. It detects keypoints using FAST, assigns each keypoint an orientation, and computes a binary BRIEF descriptor rotated to that orientation. ORB features are fast to compute and can be used for real-time applications.

These extracted features can then be used to build a high-level understanding of the scene.
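To give a feel for how the binary descriptors used by BRISK and ORB work, here is a minimal, simplified sketch in plain Python. It is not the actual BRIEF, BRISK or ORB implementation: the sampling pattern is random, there is no smoothing or orientation handling, and the function names and the synthetic image are assumptions made for illustration. Each bit records whether one pixel near the keypoint is darker than another, and two descriptors are compared with the Hamming distance.

```python
import random


def make_pairs(n_bits, radius, seed=0):
    """Random (dx1, dy1, dx2, dy2) pixel-pair offsets; a stand-in for a real sampling pattern."""
    rng = random.Random(seed)
    return [tuple(rng.randint(-radius, radius) for _ in range(4)) for _ in range(n_bits)]


def binary_descriptor(image, cx, cy, pairs):
    """BRIEF-style descriptor: one bit per intensity comparison around (cx, cy)."""
    bits = 0
    for dx1, dy1, dx2, dy2 in pairs:
        a = image[cy + dy1][cx + dx1]
        b = image[cy + dy2][cx + dx2]
        bits = (bits << 1) | (1 if a < b else 0)
    return bits


def hamming(d1, d2):
    """Number of differing bits between two descriptors."""
    return bin(d1 ^ d2).count("1")


# Synthetic 9x9 grayscale image and a brighter, higher-contrast copy of it.
image = [[(7 * x + 13 * y) % 50 for x in range(9)] for y in range(9)]
brighter = [[2 * v + 10 for v in row] for row in image]

pairs = make_pairs(n_bits=32, radius=3)
d_a = binary_descriptor(image, 4, 4, pairs)
d_b = binary_descriptor(brighter, 4, 4, pairs)
distance = hamming(d_a, d_b)  # 0: the comparisons survive this brightness change
```

Because only the ordering of intensities matters, the descriptor is unchanged by any brightness change that preserves that ordering, and comparing descriptors reduces to an XOR and a bit count, which is one reason binary descriptors suit real-time use.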

Data Association

Data associations in computer vision refer to the process of linking or associating data from different sources or frames to establish correspondences or relationships between them. In the context of autonomous driving, data associations play a crucial role in understanding the environment and making informed decisions.

One common application of data associations in autonomous driving is object tracking. By associating data from consecutive frames, such as bounding boxes or keypoints, the system can track the movement of objects over time. This information is essential for predicting object trajectories and ensuring the safety of the vehicle.
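As a rough illustration of frame-to-frame association, the sketch below matches existing tracks to new detections by bounding-box overlap (intersection over union, IoU) using a simple greedy strategy. The box format `(x1, y1, x2, y2)`, the `min_iou` threshold and the function names are assumptions made for this example; production trackers often use optimal assignment and motion models instead.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedy matching, best overlap first. Returns {track_id: detection_index}."""
    candidates = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in candidates:
        if score < min_iou:
            break  # remaining candidates overlap even less
        if tid in matches or j in used:
            continue  # each track and each detection is matched at most once
        matches[tid] = j
        used.add(j)
    return matches


# Boxes from the previous frame (by track id) and detections in the current frame.
tracks = {1: (10, 10, 50, 50), 2: (100, 100, 140, 140)}
detections = [(102, 101, 142, 141), (12, 11, 52, 51), (300, 300, 320, 320)]

matches = associate(tracks, detections)  # track 1 -> detection 1, track 2 -> detection 0
```

The third detection overlaps no existing track, so it stays unmatched; a tracker would typically start a new track for it.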

Another use case of data associations is in multi-sensor fusion. Autonomous vehicles often rely on multiple sensors, such as cameras, LiDAR, and radar, to perceive the environment. By associating data from these different sensors, the system can create a more comprehensive and accurate representation of the surroundings. This fusion of data enables better object detection, localization, and scene understanding.

Data associations can be challenging due to various factors, including occlusions, sensor noise, and object appearance changes. Robust algorithms and techniques, such as Kalman filters, particle filters, and graph-based methods, are commonly used to handle these challenges and establish reliable associations.
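To show how a Kalman filter supports association, here is a minimal one-dimensional constant-velocity filter written in plain Python. It is a sketch under simplifying assumptions: a single coordinate, position-only measurements, and made-up noise values (`process_var`, `meas_var`). The predicted position is what a tracker would compare against new detections, which helps when an object is briefly occluded or the measurements are noisy.

```python
class Kalman1D:
    """Constant-velocity Kalman filter for one coordinate; state is (position, velocity)."""

    def __init__(self, pos, vel=0.0, pos_var=1.0, vel_var=1.0,
                 process_var=1e-3, meas_var=0.25):
        self.p, self.v = pos, vel
        self.P = [[pos_var, 0.0], [0.0, vel_var]]  # state covariance
        self.q, self.r = process_var, meas_var     # illustrative noise levels

    def predict(self, dt=1.0):
        """Propagate state and covariance: x = F x, P = F P F^T + Q."""
        (p00, p01), (p10, p11) = self.P
        self.p += dt * self.v
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + self.q, p01 + dt * p11],
            [p10 + dt * p11, p11 + self.q],
        ]
        return self.p

    def update(self, z):
        """Correct the state with a position measurement z (H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r                 # innovation variance
        k0, k1 = p00 / s, p10 / s        # Kalman gain
        y = z - self.p                   # innovation
        self.p += k0 * y
        self.v += k1 * y
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]


# An object moving 2 units per frame, observed for 20 frames.
kf = Kalman1D(pos=0.0)
for t in range(1, 21):
    kf.predict()
    kf.update(2.0 * t)

next_position = kf.predict()  # where to look for this object in the next frame
```

After a few frames the velocity estimate approaches the true value of 2, so the prediction for the next frame lands close to where the object will actually appear.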

In summary, data associations are a fundamental aspect of computer vision in autonomous driving. They enable the system to track objects, fuse data from multiple sensors, and make informed decisions based on the analyzed information.

Photogrammetry

Photogrammetry is the science and technology of extracting geometric information from photographs or digital images. It involves capturing multiple images of an object or a scene from different angles and using these images to reconstruct the 3D structure and measurements of the subject.

The process of photogrammetry typically involves the following steps:

- Image Acquisition: Multiple images of the object or scene are captured using cameras or other imaging devices. These images should cover the subject from different viewpoints and angles.

- Camera Calibration: The intrinsic and extrinsic parameters of the camera(s) used for image acquisition need to be calibrated. This involves determining the camera's focal length, principal point, lens distortion, and the relationship between the camera coordinate system and the world coordinate system.

- Feature Extraction: Keypoints or distinctive features are extracted from the images. These features can be points, edges, corners, or other visual patterns that can be reliably detected and matched across multiple images.

- Feature Matching: The extracted features from different images are matched to establish correspondences. This is done by comparing the descriptors or characteristics of the features and finding the best matches based on similarity measures.

- Triangulation: Using the matched features, the 3D positions of the corresponding points in the scene are estimated. This is done by triangulating the rays of light that pass through the camera centers and intersect with the matched features.

- Bundle Adjustment: The estimated 3D positions of the points and the camera parameters are refined to minimize the reprojection errors. This optimization step ensures that the reconstructed 3D structure is consistent across all images and accurately represents the real-world scene.

- Surface Reconstruction: The reconstructed 3D points are used to create a surface or mesh representation of the object or scene. This can be done using techniques such as Delaunay triangulation or voxel-based methods.

- Texture Mapping: The captured images are projected onto the reconstructed surface to create a realistic textured 3D model. This step enhances the visual appearance of the reconstructed object or scene.
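The triangulation step can be sketched with the midpoint method: given two camera centers and the viewing rays through a matched feature, find the point closest to both rays. This is one simple way to triangulate, not necessarily what a given photogrammetry package does, and the camera positions and the test point below are invented for the example.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are (nearly) parallel; depth cannot be recovered")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [ci + s * di for ci, di in zip(c1, d1)]  # closest point on ray 1
    p2 = [ci + t * di for ci, di in zip(c2, d2)]  # closest point on ray 2
    return tuple((u + v) / 2 for u, v in zip(p1, p2))


# Two cameras one unit apart, both observing the same scene point.
scene_point = (0.5, 0.2, 4.0)
cam1, cam2 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
ray1 = tuple(p - c for p, c in zip(scene_point, cam1))
ray2 = tuple(p - c for p, c in zip(scene_point, cam2))

estimate = triangulate_midpoint(cam1, ray1, cam2, ray2)  # approximately (0.5, 0.2, 4.0)
```

With noisy matches the two rays no longer intersect exactly, which is why the midpoint (or a least-squares solution) is used and why the result is later refined by bundle adjustment.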

Photogrammetry pipelines can vary depending on the specific application and the available resources. Some pipelines may involve additional steps such as image preprocessing, image rectification, or dense point cloud generation. The mathematical principles underlying photogrammetry include concepts from geometry, linear algebra, optimization, and computer vision.
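As an example of the geometry involved, the sketch below computes the reprojection error that bundle adjustment tries to minimize. It assumes an idealized pinhole camera with no rotation and no lens distortion, and the focal length, principal point and camera positions are arbitrary illustrative values.

```python
import math


def project(point, f, cx, cy, cam_center=(0.0, 0.0, 0.0)):
    """Pinhole projection for a camera at cam_center looking along +Z (no rotation)."""
    x, y, z = (p - c for p, c in zip(point, cam_center))
    return (f * x / z + cx, f * y / z + cy)


def reprojection_error(point, observations, f, cx, cy):
    """Mean pixel distance between observed and re-projected image points."""
    total = 0.0
    for cam_center, (u_obs, v_obs) in observations:
        u, v = project(point, f, cx, cy, cam_center)
        total += math.hypot(u - u_obs, v - v_obs)
    return total / len(observations)


F, CX, CY = 800.0, 320.0, 240.0           # assumed intrinsics, in pixels
cameras = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
true_point = (0.5, 0.2, 4.0)

# Simulated observations: where the true point appears in each image.
observations = [(c, project(true_point, F, CX, CY, c)) for c in cameras]

good = reprojection_error(true_point, observations, F, CX, CY)      # zero for the true point
bad = reprojection_error((0.5, 0.2, 4.5), observations, F, CX, CY)  # larger for a wrong depth
```

Bundle adjustment searches over the 3D points and camera parameters for the values that make this error as small as possible across all images.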

Overall, photogrammetry is a powerful technique that enables the creation of accurate 3D models from 2D images. It finds applications in various fields such as architecture, archaeology, virtual reality, and industrial metrology.

Note: We have covered Simultaneous Localisation and Mapping (SLAM) in our prediction blog.

Challenges faced

Handling Varied Lighting Conditions

One of the challenges in computer vision is handling varied lighting conditions. Different lighting conditions can affect the appearance of objects in an image, making it difficult to accurately detect and classify them. To address this challenge, computer vision algorithms need to be robust to changes in lighting and capable of adapting to different lighting conditions.
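One simple and widely used way to reduce sensitivity to lighting is to normalize intensities before comparing image patches. The sketch below, with invented pixel values, rescales a patch to zero mean and unit variance, which cancels a uniform change in brightness (offset) and contrast (gain). It is only an illustration of the idea; it does not handle shadows, glare or other non-uniform lighting effects.

```python
from statistics import mean, pstdev


def normalize(patch):
    """Rescale a flat list of intensities to zero mean and unit variance."""
    m, s = mean(patch), pstdev(patch)
    if s == 0:
        return [0.0] * len(patch)  # a perfectly flat patch carries no texture
    return [(v - m) / s for v in patch]


# The same patch under two lighting conditions: the second is brighter with more contrast.
patch = [10, 20, 30, 40]
brighter = [2 * v + 15 for v in patch]

difference = max(abs(a - b) for a, b in zip(normalize(patch), normalize(brighter)))
```

After normalization the two patches are numerically almost identical, so a matcher that compares normalized patches sees the same content under both lighting conditions.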

Object Detection and Classification

Another challenge in computer vision is object detection and classification. This involves accurately identifying and categorizing objects in an image. Object detection algorithms need to be able to locate objects of interest and classify them correctly. This task becomes more challenging when dealing with complex scenes, occlusions, and variations in object appearance.

Real-Time Processing

Real-time processing is a significant challenge in computer vision. Many applications, such as autonomous driving and surveillance systems, require real-time analysis of video streams. This means that computer vision algorithms need to be efficient and capable of processing large amounts of data in real-time. Optimizations, such as parallel processing and hardware acceleration, are often employed to achieve real-time performance.

Robustness to Environmental Factors

Computer vision algorithms need to be robust to environmental factors, such as noise, clutter, and variations in camera viewpoints. These factors can introduce uncertainties and make it challenging to accurately analyze and interpret visual data. Robustness to environmental factors involves developing algorithms that can handle these uncertainties and produce reliable results in various real-world scenarios.

Conclusion

In conclusion, feature extraction methods such as SIFT, BRISK, SURF, and ORB play a crucial role in computer vision systems. They enable the system to detect and describe keypoints in images in a way that is robust to changes in scale and rotation. These extracted features can be used for various tasks such as image matching, object recognition, and image stitching.

Data associations are essential in autonomous driving systems as they establish correspondences between data from different sources or frames. They enable object tracking, multi-sensor fusion, and better understanding of the environment.

Photogrammetry is a powerful technique for extracting geometric information from photographs or digital images. It involves capturing multiple images, calibrating cameras, extracting features, matching them, triangulating 3D positions, refining parameters, reconstructing surfaces, and mapping textures. Photogrammetry finds applications in architecture, archaeology, virtual reality, and industrial metrology.

Computer vision faces challenges such as handling varied lighting conditions, object detection and classification, real-time processing, and robustness to environmental factors. Overcoming these challenges requires robust algorithms that can adapt to different lighting conditions, accurately detect and classify objects, process data in real-time, and handle uncertainties in various scenarios.

Overall, computer vision is a rapidly evolving field with numerous applications and open challenges. Feature extraction, data association, and photogrammetry together give an autonomous vehicle, such as a self-driving motorcycle, the means to perceive and understand its surroundings.